How I used the MdM-DTIC Open Science Award for advancing my PhD research

I was awarded the María de Maeztu DTIC-UPF Open Science Award at the 6th Doctoral Student Workshop, organised by the Department of Information and Communication Technologies of UPF in 2018. This award is given to pre-doctoral researchers who make an outstanding effort towards the reproducibility and visibility of their research.

Award winners at the 6th UPF-DTIC Doctoral Student Workshop

In this post, I will describe how I used the 1000 EUR prize to further advance my research. (This post was originally published on the DTIC-MdM blog.)

I used the 2018 MdM Open Science Award to fund the collection of a prosodically annotated parallel speech dataset called the Heroes Corpus.

Part of my thesis involves building a speech translation pipeline to be used in automatic dubbing. I research why and how to include the prosodic characteristics (intonation, rhythm and stress) of the speakers’ and actors’ speech in this scenario. Developing such a pipeline requires parallel data for training the translation model, and it requires speech data because prosody is taken into account.

Producing speech data is a costly process that involves script preparation, speaker selection, recording and annotation. Since this approach was out of reach, I developed a framework that exploits dubbed media material, which readily contains parallel and prosodically rich speech. The framework, which I call movie2parallelDB, automates this process by taking in the audio tracks and subtitles of a movie and outputting aligned voice segments with text and prosody annotation. Movie2parallelDB is available as open source software on GitHub.
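To give a rough idea of what aligning segments across languages can look like, here is a minimal Python sketch. It is not the actual movie2parallelDB code: it assumes SRT-formatted subtitles, and the names `Cue`, `parse_srt`, `overlap_ratio`, `pair_cues` and the `min_overlap` threshold are all made up for illustration. It simply pairs each original-language cue with the dubbed-language cue that overlaps it most in time.

```python
from __future__ import annotations

import re
from dataclasses import dataclass


@dataclass
class Cue:
    """One subtitle entry: timing in seconds plus its text (hypothetical type)."""
    start: float
    end: float
    text: str


def parse_timestamp(stamp: str) -> float:
    """Convert an SRT timestamp such as '00:01:02,500' to seconds."""
    hours, minutes, rest = stamp.strip().split(":")
    seconds, millis = rest.split(",")
    return int(hours) * 3600 + int(minutes) * 60 + int(seconds) + int(millis) / 1000


def parse_srt(content: str) -> list[Cue]:
    """Parse SRT text into cues; blocks are separated by blank lines."""
    cues = []
    for block in re.split(r"\n\s*\n", content.strip()):
        lines = block.strip().splitlines()
        if len(lines) < 3:
            continue  # skip malformed blocks (index, timing and text are required)
        start, end = lines[1].split("-->")
        cues.append(Cue(parse_timestamp(start), parse_timestamp(end), " ".join(lines[2:])))
    return cues


def overlap_ratio(a: Cue, b: Cue) -> float:
    """Temporal overlap of two cues, relative to the shorter one (0.0 to 1.0)."""
    intersection = min(a.end, b.end) - max(a.start, b.start)
    shorter = min(a.end - a.start, b.end - b.start)
    return max(0.0, intersection) / shorter if shorter > 0 else 0.0


def pair_cues(original: list[Cue], dubbed: list[Cue], min_overlap: float = 0.5) -> list[tuple[Cue, Cue]]:
    """Pair each original cue with its best-overlapping dubbed cue, if good enough."""
    pairs = []
    for o in original:
        best = max(dubbed, key=lambda d: overlap_ratio(o, d), default=None)
        if best is not None and overlap_ratio(o, best) >= min_overlap:
            pairs.append((o, best))
    return pairs
```

Calling `pair_cues(parse_srt(english_srt), parse_srt(spanish_srt))` returns a list of cue pairs whose time spans could then be used to cut the corresponding audio tracks. A sketch like this only works when the subtitle timings actually follow the audio in both languages.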

The framework requires correctly labelled subtitles for both the original and the dubbed version of the movie. Although these are easy to find in the original language of a movie, this is often not the case for the dubbed language. Practical differences between the dubbing process and the subtitling process lead to non-matching audio and transcripts, which in turn hinders automatic extraction of the speech data. This means that the subtitles need to be corrected manually to match the dubbing script.

I decided to use a TV series called Heroes for this work as its Spanish subtitles were close enough to its Spanish dubbing scripts. Timing and transcript corrections were done for 21 episodes by two interns, Sandra Marcos and Laura Gómez. A part of the prize was used to finance their working hours.

The corpus extraction methodology that was developed during the process and the resulting corpus were presented at the IberSPEECH 2018 conference.

The resulting Heroes corpus contains 7000 parallel English and Spanish single-speaker speech segments. Audio segments are accompanied by subtitle transcriptions and word-level prosodic/paralinguistic information. The corpus is openly accessible through the UPF e-repository.

The rest of the prize was used to fund my final-year registration fees and the “Educational dialog systems” tutorial that I attended at Interspeech 2019 in Hyderabad, India.

I am very grateful to the María de Maeztu programme for this boost to my PhD work, and I hope that it has further positive repercussions in the speech and translation research community through the resources that were made openly available.

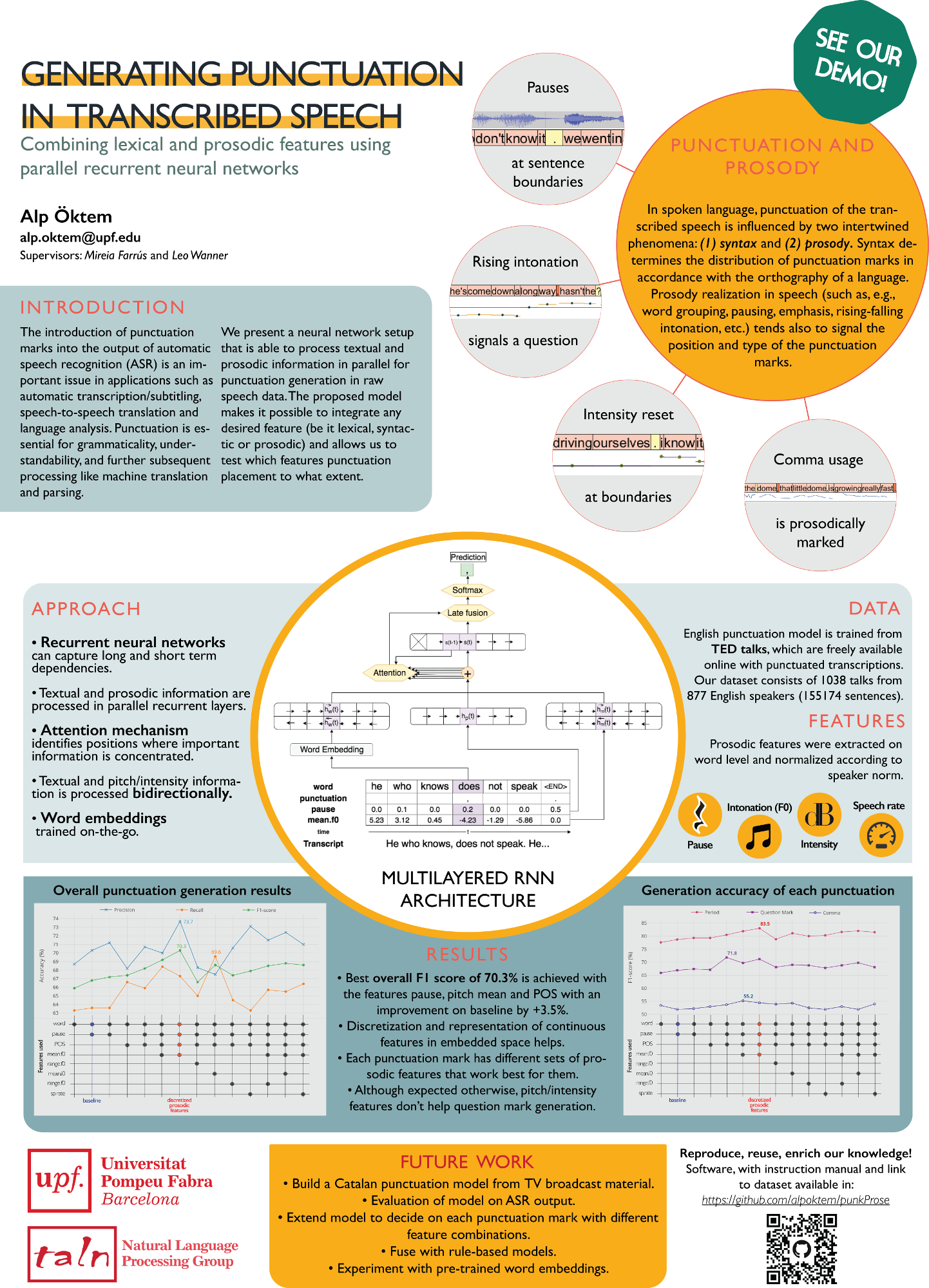

Finally, here’s the poster I designed for the workshop, which describes my methodology for prosodic-lexical punctuation restoration. (Special thanks to Lisa Herzog for her artistic touch.)